PBSE: Personalized Bookmark Search Engine

I led a team that developed a Google Chrome extension built around the idea of Intelligent Browsing: a “bookmark-and-search” feature that makes saved bookmarks easier to search and access. This was our final project for CS 410: Text Information Systems (Fall 2021), taught by Prof. ChengXiang Zhai at the University of Illinois at Urbana-Champaign.

Introduction

Most modern web browsers, such as Google Chrome, have a bookmark feature that lets users save website URLs for future reference. As the bookmark list grows over time, however, users may find it difficult to locate the content they want. In this project, we created a personalized bookmark search engine as a Google Chrome extension built around the idea of Intelligent Browsing, implementing a “bookmark-and-search” feature that makes bookmarks easier to search and access. Rather than matching only a page's URL and title, the extension indexes the content of each bookmarked page, so searches return more relevant material.

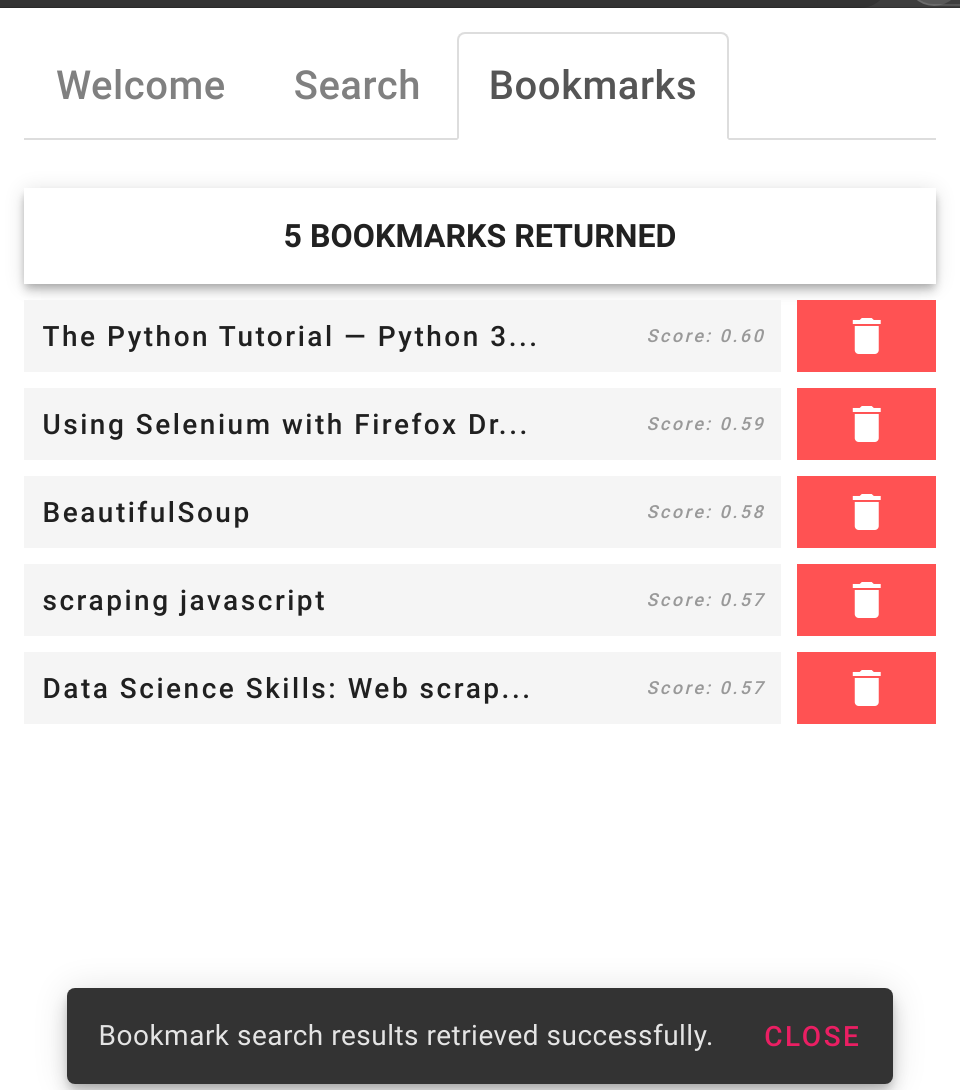

Frontend

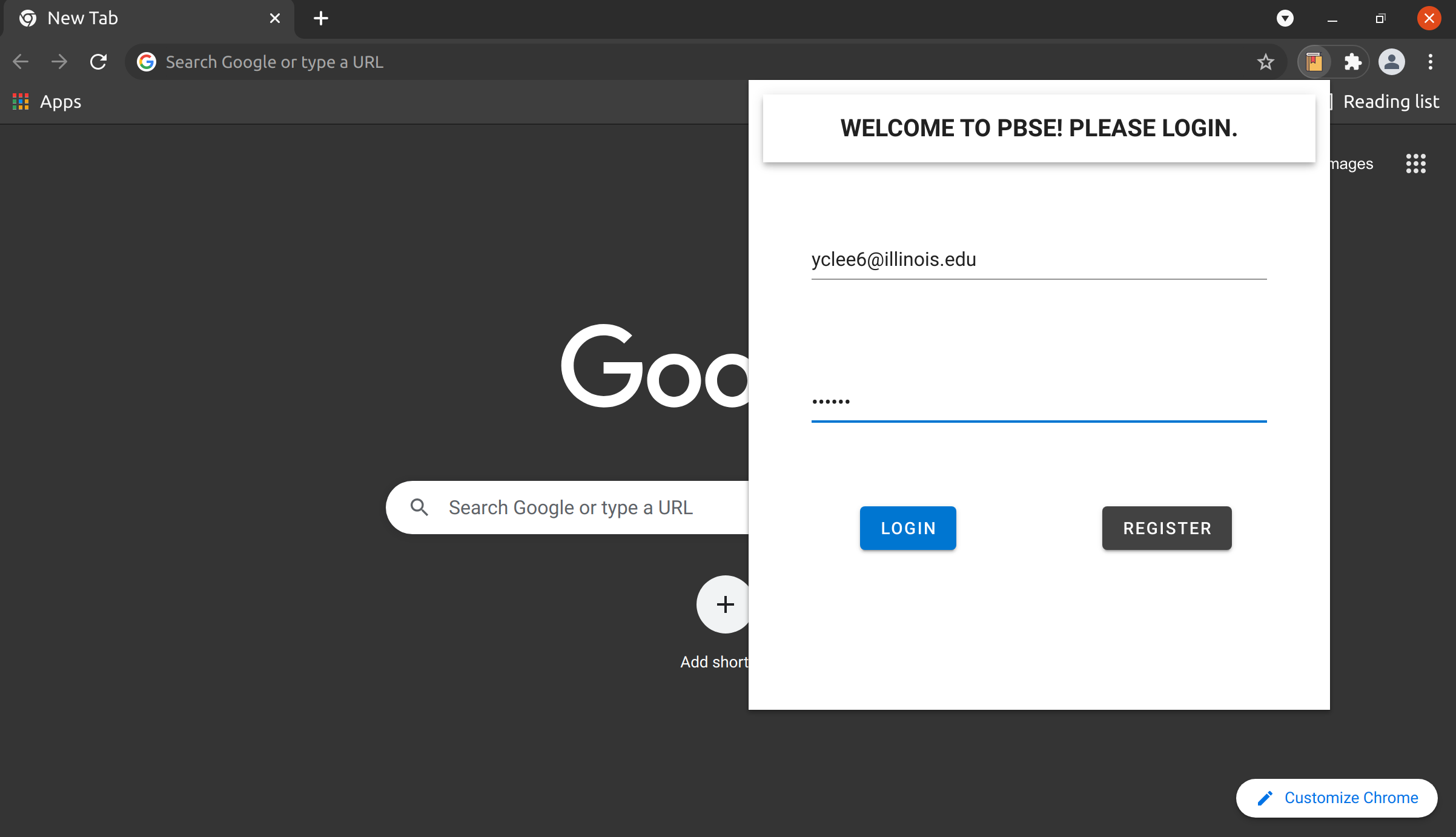

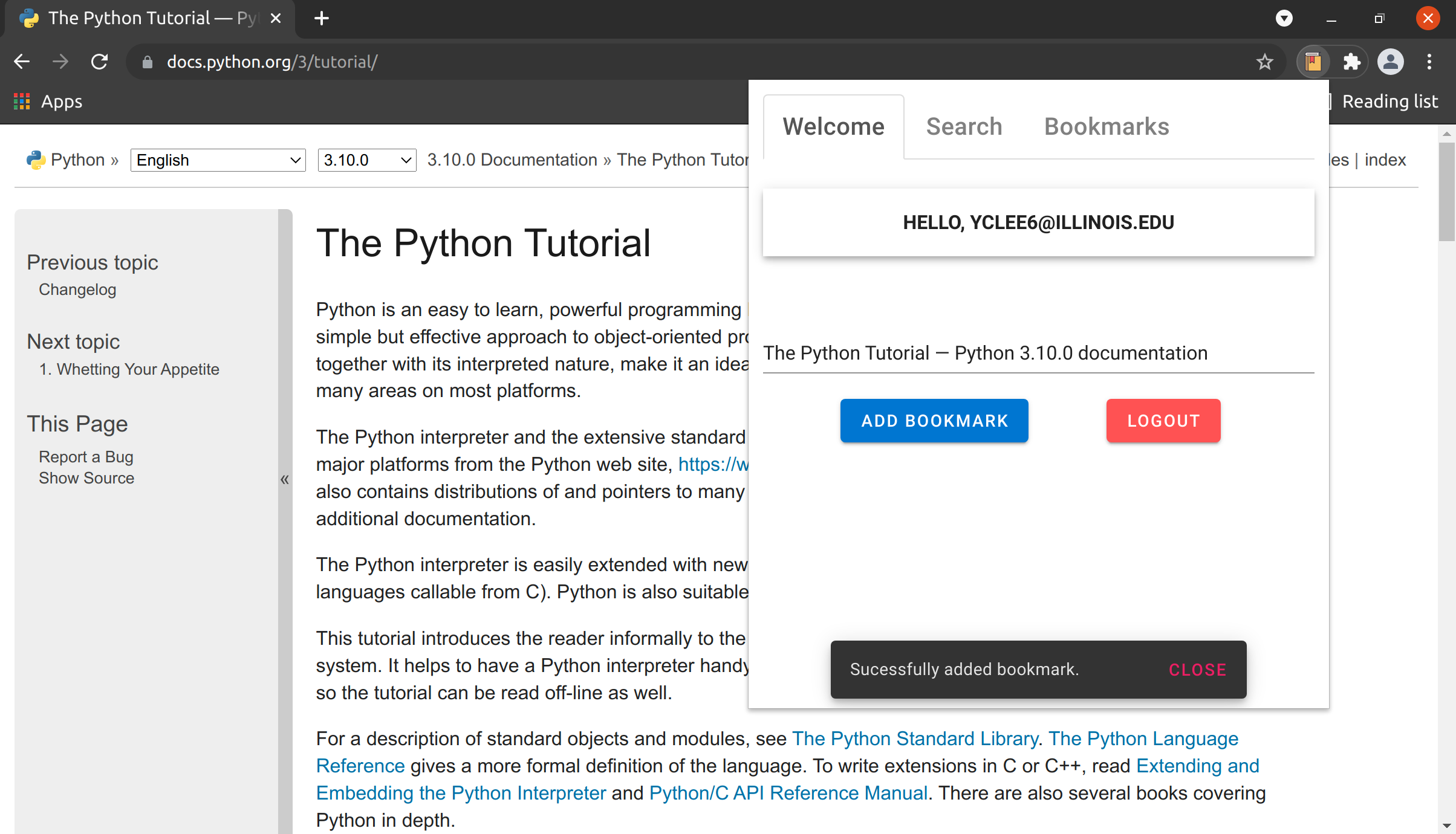

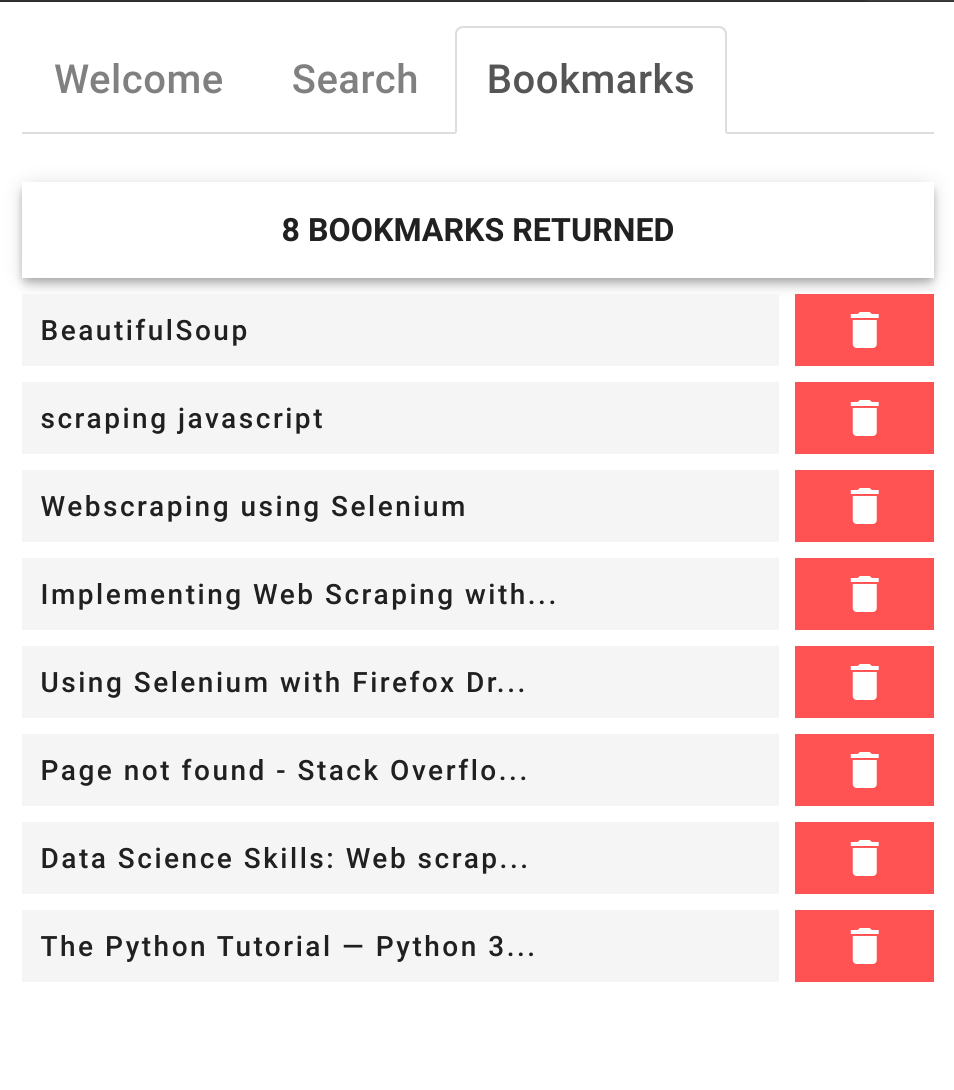

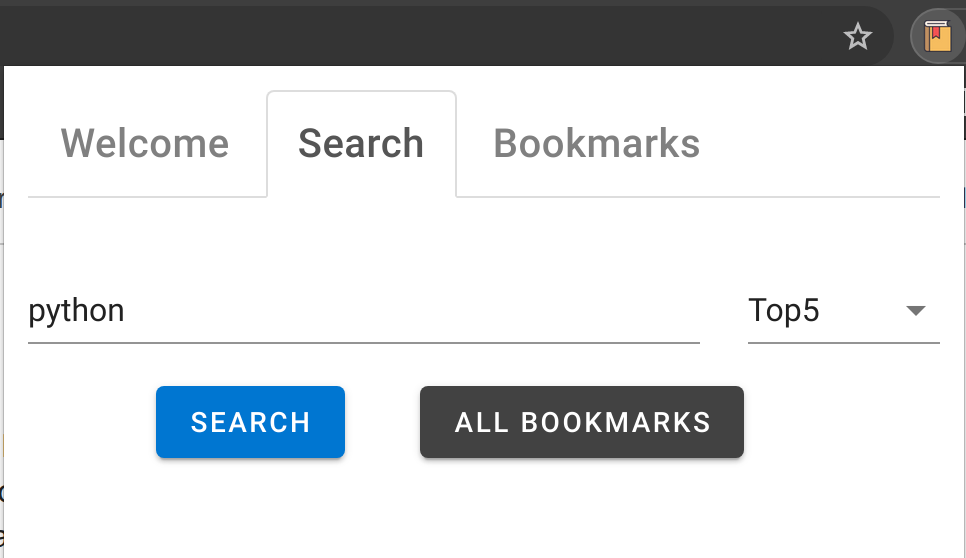

The frontend Chrome extension is written in JavaScript and consists of multiple GUI components built with the Vue.js framework. Users interact with these components to log in, add bookmarks, and search the contents of their bookmarks.

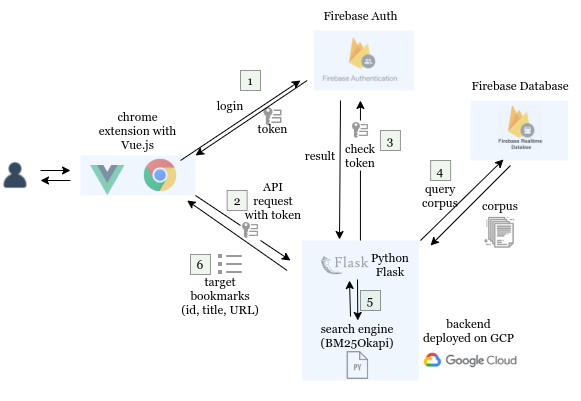

Architecture

Our project supported logging in, adding a new bookmark, listing all bookmarks, searching bookmarks, and deleting a bookmark. To achieve this, we implemented the frontend as a Chrome extension with Vue.js and the backend with Python Flask, deployed on Cloud Run on Google Cloud Platform (GCP), and used Firebase services to authenticate users and store the contents of their bookmarks.

User Management

We used Firebase Authentication [1] to manage and authenticate users. When a user logs in, the frontend retrieves an ID token for that user through the Firebase Auth SDK. This ID token serves as the authorization key the user presents to access the services offered by our backend.
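On the backend, each request then has to turn that ID token back into a user. The following is a minimal sketch, assuming the frontend sends the token in an `Authorization: Bearer <token>` header (an assumption; the header format is not specified here) and that the backend checks it with the Firebase Admin SDK's `auth.verify_id_token`. The helper names `extract_bearer_token` and `authenticate` are hypothetical, and the verifier is injectable so the logic can be exercised without Firebase credentials.

```python
from typing import Callable, Optional


def extract_bearer_token(auth_header: Optional[str]) -> Optional[str]:
    """Return <token> from an 'Authorization: Bearer <token>' header value, else None."""
    if not auth_header:
        return None
    scheme, _, token = auth_header.partition(" ")
    token = token.strip()
    if scheme.lower() != "bearer" or not token:
        return None
    return token


def authenticate(
    auth_header: Optional[str],
    verify: Optional[Callable[[str], dict]] = None,
) -> Optional[str]:
    """Return the Firebase user id (uid) for a request, or None if unauthenticated.

    Hypothetical helper: by default it uses firebase_admin.auth.verify_id_token,
    which assumes firebase_admin.initialize_app() has already been called.
    """
    token = extract_bearer_token(auth_header)
    if token is None:
        return None
    if verify is None:
        # Imported lazily so the header parsing works without the Admin SDK installed.
        from firebase_admin import auth
        verify = auth.verify_id_token
    try:
        claims = verify(token)  # raises if the token is malformed, expired, or otherwise invalid
    except Exception:
        return None
    return claims.get("uid")
```

In a Flask view this would be called as `authenticate(request.headers.get("Authorization"))`, answering with HTTP 401 when the result is `None`.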

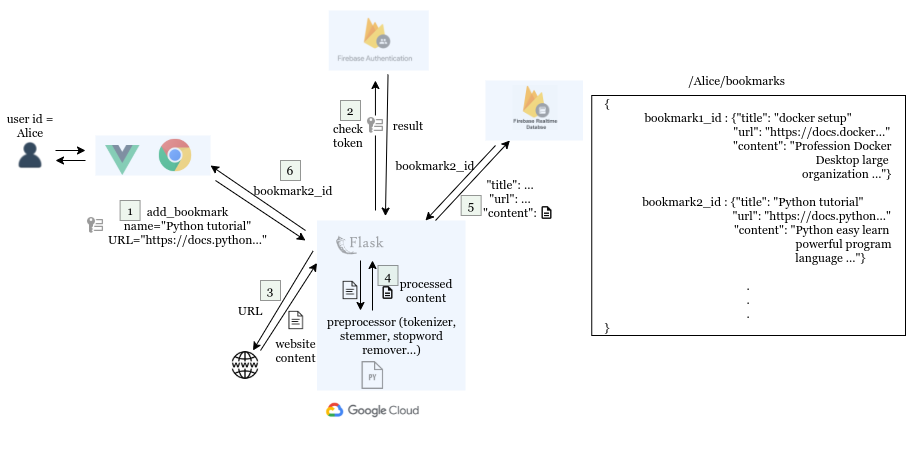

Bookmark Management

When the backend receives a request to add a new bookmark, it processes the content of the bookmarked page and stores the result in the Firebase Realtime Database [2]. We used the Firebase SDK [3] in our backend scripts, which made it straightforward to store data in and retrieve data from the database.
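The record layout is not described here, so the following is a hypothetical sketch of how a processed bookmark could be written with the Firebase Admin SDK's `db.reference(path).set(value)`. It assumes a `bookmarks/<uid>/<key>` layout (our invention) and hashes the URL into the key, because Realtime Database keys may not contain `.`, `$`, `#`, `[`, `]` or `/`, all of which are common in URLs. The function names are illustrative only.

```python
import hashlib
from typing import Callable, Dict, List, Optional


def bookmark_path(uid: str, url: str) -> str:
    """Hypothetical layout: bookmarks/<uid>/<sha1 of the URL>."""
    # Hashing gives a deterministic key (re-adding a URL overwrites it) made only
    # of hex digits, which are always legal in Realtime Database keys.
    key = hashlib.sha1(url.encode("utf-8")).hexdigest()
    return f"bookmarks/{uid}/{key}"


def bookmark_record(url: str, title: str, tokens: List[str]) -> Dict[str, object]:
    """The value stored for one bookmark: its URL, title and preprocessed tokens."""
    return {"url": url, "title": title, "tokens": tokens}


def save_bookmark(
    uid: str,
    url: str,
    title: str,
    tokens: List[str],
    reference: Optional[Callable] = None,
) -> str:
    """Write one bookmark and return its database path."""
    if reference is None:
        # Assumes firebase_admin.initialize_app() was called with a databaseURL option.
        from firebase_admin import db
        reference = db.reference
    path = bookmark_path(uid, url)
    reference(path).set(bookmark_record(url, title, tokens))
    return path
```

Under this layout, listing all of a user's bookmarks would be a single read of `bookmarks/<uid>` (for example via `db.reference(...).get()`), and deleting one would remove its key.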

Processing

The processing of the content had several steps.

Crawling of Page

We crawled each page and extracted the text from its HTML with the Python package BeautifulSoup (bs4.BeautifulSoup). However, our crawler did not support websites that generate their content with JavaScript, because BeautifulSoup only parses the HTML it is given and does not execute scripts.
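A minimal sketch of this step, assuming the page is fetched with the standard library's `urllib` (the HTTP client we used is not stated here) and that the contents of `<script>`, `<style>` and `<noscript>` tags should be dropped before extracting text. The names `html_to_text` and `crawl` are hypothetical. Because only the static HTML is parsed, any text a page renders with JavaScript never reaches `html_to_text`, which is the limitation noted above.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup


def html_to_text(html: str) -> str:
    """Extract the visible text of an HTML document as one space-separated string."""
    soup = BeautifulSoup(html, "html.parser")
    # Script and style bodies are not visible page text, so remove them first.
    for tag in soup(["script", "style", "noscript"]):
        tag.decompose()
    # strip=True trims each text fragment and skips whitespace-only ones.
    return soup.get_text(separator=" ", strip=True)


def crawl(url: str, timeout: float = 10.0) -> str:
    """Fetch a page's static HTML (no JavaScript is executed) and return its text."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (bookmark crawler sketch)"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return html_to_text(html)
```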

Processing the Page Content

After the text of the website is crawled, we process the content in several steps.

- First, remove all extra whitespace from the text and convert it to lowercase.

- Remove all stopwords [4] from the content.

- Tokenize the full content and return a list of words.

- Remove special characters (such as HTML tags) from the words.

- Stem each word using the Porter stemming algorithm [5].

We used several Python packages for these steps: gensim to remove stopwords, and nltk to tokenize and stem the words.

Searching

Search requests are handled by a function that returns the top \(N\) results. We scored the documents against the query with the BM25Okapi implementation of the Okapi BM25 algorithm [6] in the Python package rank_bm25 [7]. Given a query \(Q = q_1q_2 \ldots q_n\), the BM25 score of a document \(d\) is defined as follows:

\[
\text{score}(Q, d) = \sum_{i=1}^{n} \ln\!\left(\frac{|C| - df(q_i) + 0.5}{df(q_i) + 0.5}\right) \cdot \frac{c(q_i, d)\,(k_1 + 1)}{c(q_i, d) + k_1\left(1 - b + b \cdot \frac{|d|}{avgdl}\right)}
\]

where \(c(q_i,d)\) is the number of occurrences of \(q_i\) in document \(d\), \(k_1\) and \(b\) are free parameters, \(|d|\) is the length of document \(d\), \(avgdl\) is the average document length in the corpus, \(|C|\) is the number of documents in the corpus \(C\), and \(df(q_i)\) is the number of documents that contain the term \(q_i\). The logarithmic factor is the inverse document frequency (IDF) of \(q_i\), which rewards terms that appear in few documents. After the scores of all documents in the corpus are calculated, the function returns the top \(N\) results.
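To make the formula concrete, here is a small from-scratch sketch that evaluates it term by term and returns the top \(N\) documents. It is a stand-in for illustration, not rank_bm25 itself: the names `bm25_scores` and `top_n` are ours, \(k_1 = 1.5\) and \(b = 0.75\) mirror rank_bm25's defaults, and, unlike `BM25Okapi`, it does not replace negative IDF values (terms occurring in more than half of the documents) with a small positive floor.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def bm25_scores(query: Sequence[str], corpus: List[List[str]],
                k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score every tokenized document in `corpus` against a tokenized query."""
    if not corpus:
        return []
    n_docs = len(corpus)                                # |C|
    avgdl = sum(len(doc) for doc in corpus) / n_docs    # average document length
    df = Counter()                                      # df(q): documents containing q
    for doc in corpus:
        df.update(set(doc))
    scores = []
    for doc in corpus:
        tf = Counter(doc)                               # c(q, d)
        score = 0.0
        for q in query:                                 # sum over q_1 ... q_n
            c = tf[q]
            if c == 0:
                continue                                # term absent: contributes 0
            idf = math.log((n_docs - df[q] + 0.5) / (df[q] + 0.5))
            score += idf * c * (k1 + 1) / (c + k1 * (1 - b + b * len(doc) / avgdl))
        scores.append(score)
    return scores


def top_n(query: Sequence[str], corpus: List[List[str]],
          n_results: int = 5) -> List[Tuple[int, float]]:
    """Return (document index, score) pairs for the N highest-scoring documents."""
    scores = bm25_scores(query, corpus)
    ranked = sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)
    return [(i, scores[i]) for i in ranked[:n_results]]
```

For example, `top_n(["dog"], [["cat", "sat"], ["dog", "ran"], ["bird", "flew"]], 1)` returns a single pair whose index is 1. With rank_bm25 itself, the equivalent ranking comes from `BM25Okapi(tokenized_corpus).get_top_n(tokenized_query, documents, n=N)`, where `documents` holds the items to return in ranked order.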

Deployment

We deployed our backend to Cloud Run on Google Cloud Platform as a Docker container: we created a GCP project, enabled the Cloud Run service, and wrote a Dockerfile for the deployment [8]. Because we relied on Cloud Run and Firebase, both managed services, our app is fully serverless, and Cloud Run autoscales and load-balances the backend by default.

[1] Firebase Authentication Documentation: https://firebase.google.com/docs/auth

[2] Firebase Realtime Database: https://firebase.google.com/docs/database

[3] Firebase Realtime Database module (firebase_admin.db): https://firebase.google.com/docs/reference/admin/python/firebase_admin.db

[4] What are Stop Words?: https://www.opinosis-analytics.com/knowledge-base/stop-words-explained/

[5] The Porter Stemming Algorithm: https://tartarus.org/martin/PorterStemmer/

[6] Okapi BM25 Ranking: https://en.wikipedia.org/wiki/Okapi_BM25

[7] Rank-BM25: A two line search engine: https://github.com/dorianbrown/rank_bm25

[8] Build and deploy a Python service: https://cloud.google.com/run/docs/quickstarts/build-and-deploy/python